How I use AI on intensive care

I'm not an AI expert, but I am a huge fan of ChatGPT.

I strongly believe that within the next five years it will be deemed negligent not to use AI to help guide medical decision making - but that's just my take.

Existentialism, morality and water-costs aside, it is undoubtedly an exceptionally powerful tool, and as with all tools, what matters is how it is used.

Use the tool, don't be a tool.

Having spent a while playing around with it and working out its strengths and weaknesses, I've found it particularly helpful when I'm working on intensive care as the on-call registrar.

Cue enormous caveat

There's a nauseatingly thorough disclaimer at the bottom of this post as well.

This is the most important bit:

- I do not let it make final important decisions for me

- I do not do anything it suggests if I don't agree with it

- I do not undertake any procedure or prescribe any drug on my own that I wouldn't have undertaken or prescribed on my own before using ChatGPT

- I do not delay calling the boss for something I would have called them about before using ChatGPT

- I do not share any patient-identifiable information

What I do use it for:

- Checking for things I might have missed or not considered about a case that I would normally manage by myself

- Double-checking drug doses when I just need a reminder and can verify the answer independently

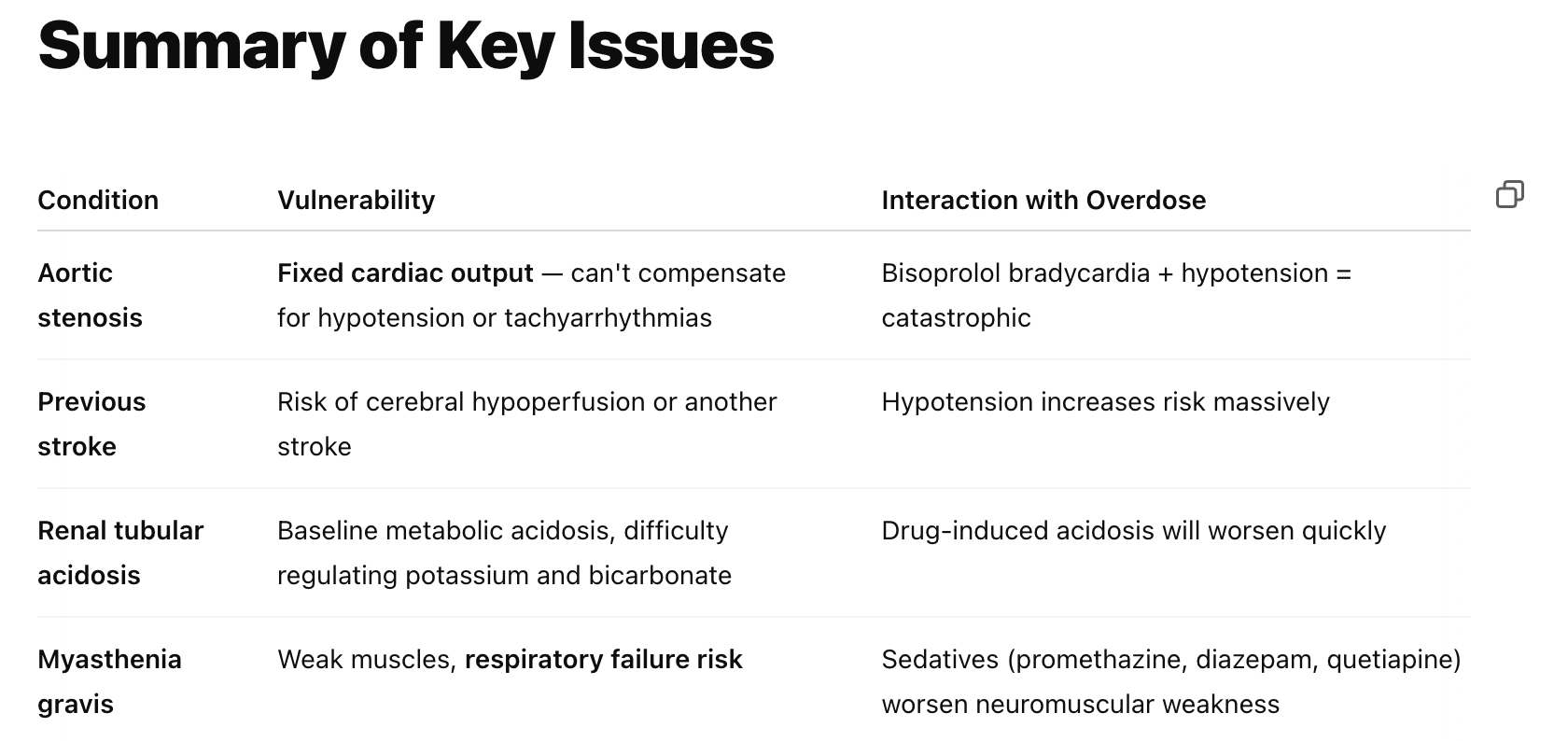

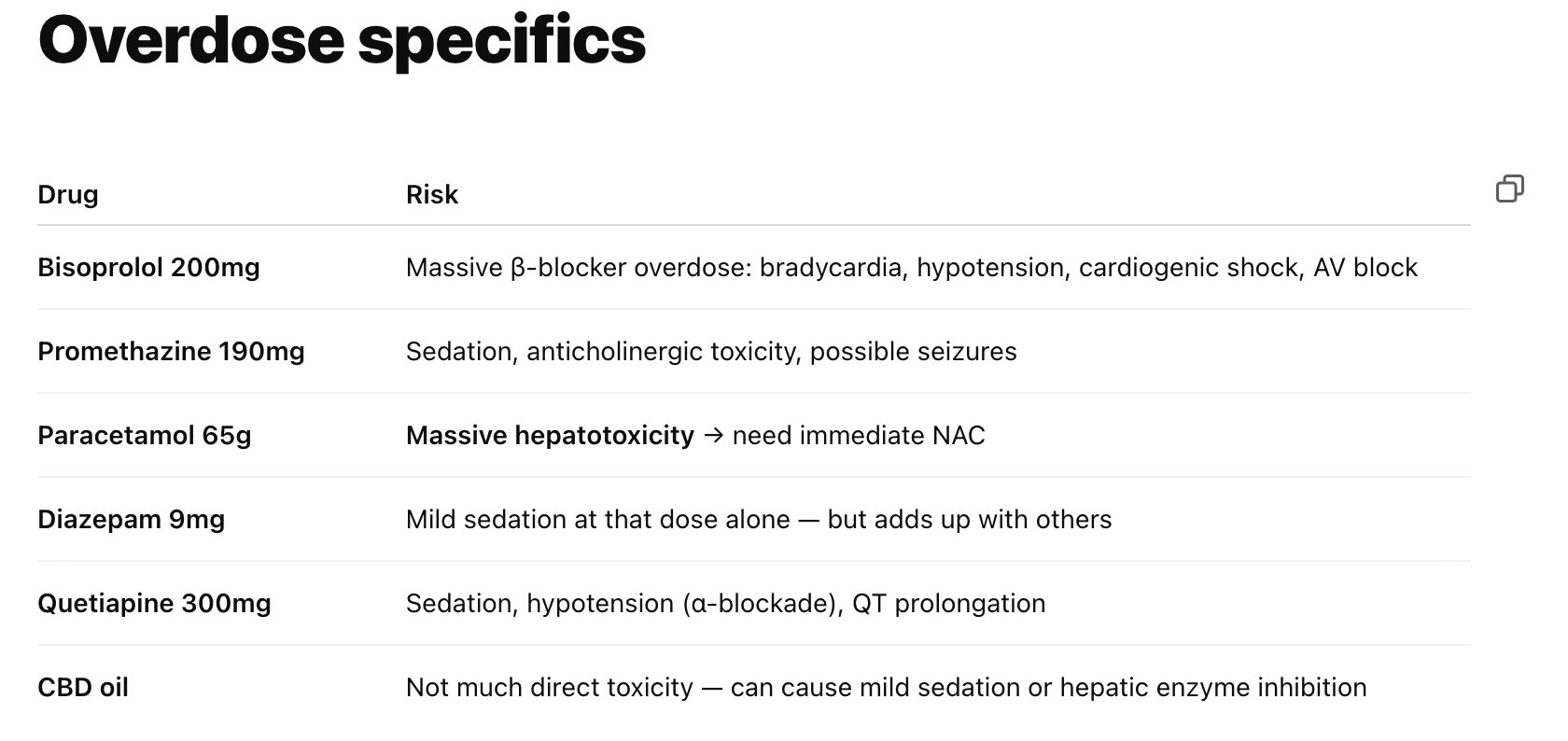

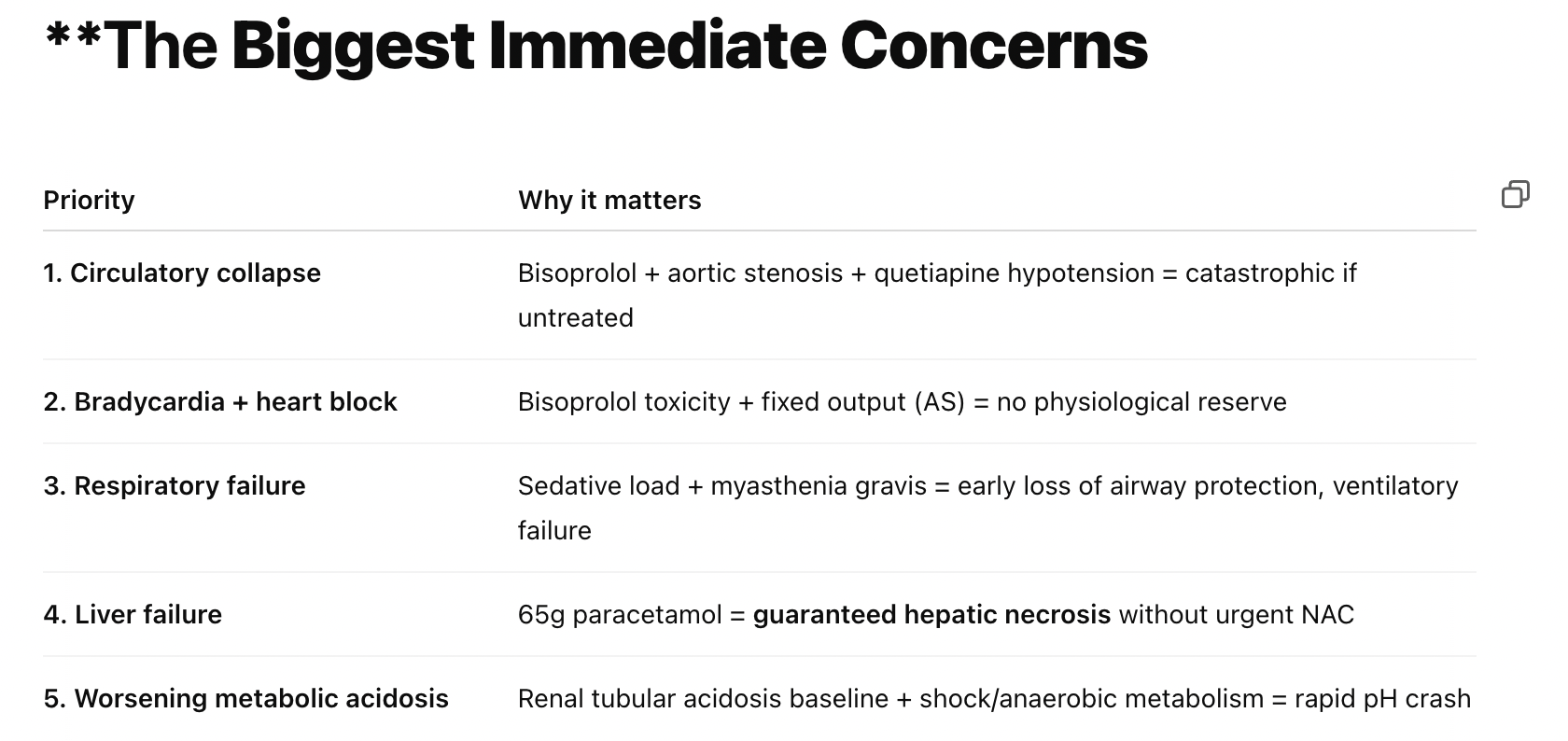

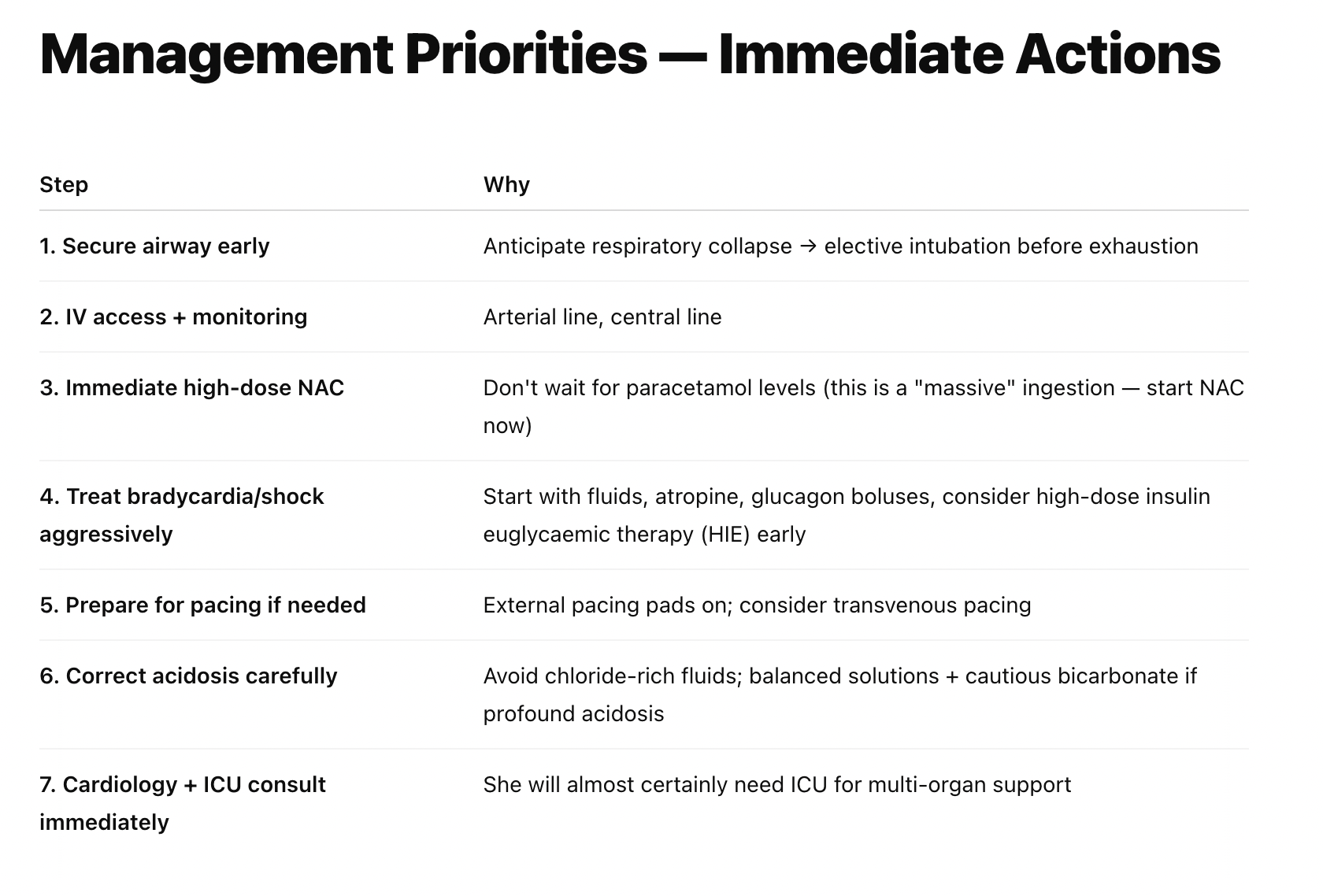

- Toxicology - it is particularly useful for patients who have taken mixed overdoses, explaining how the combination of drugs they have taken may interact

It is not a replacement for the consultant on call; it's more like having another senior registrar to bounce ideas off, much as you might ask your anaesthetic registrar colleagues for their thoughts on a tricky patient overnight.

- I use it so that I am better informed when I call the boss.

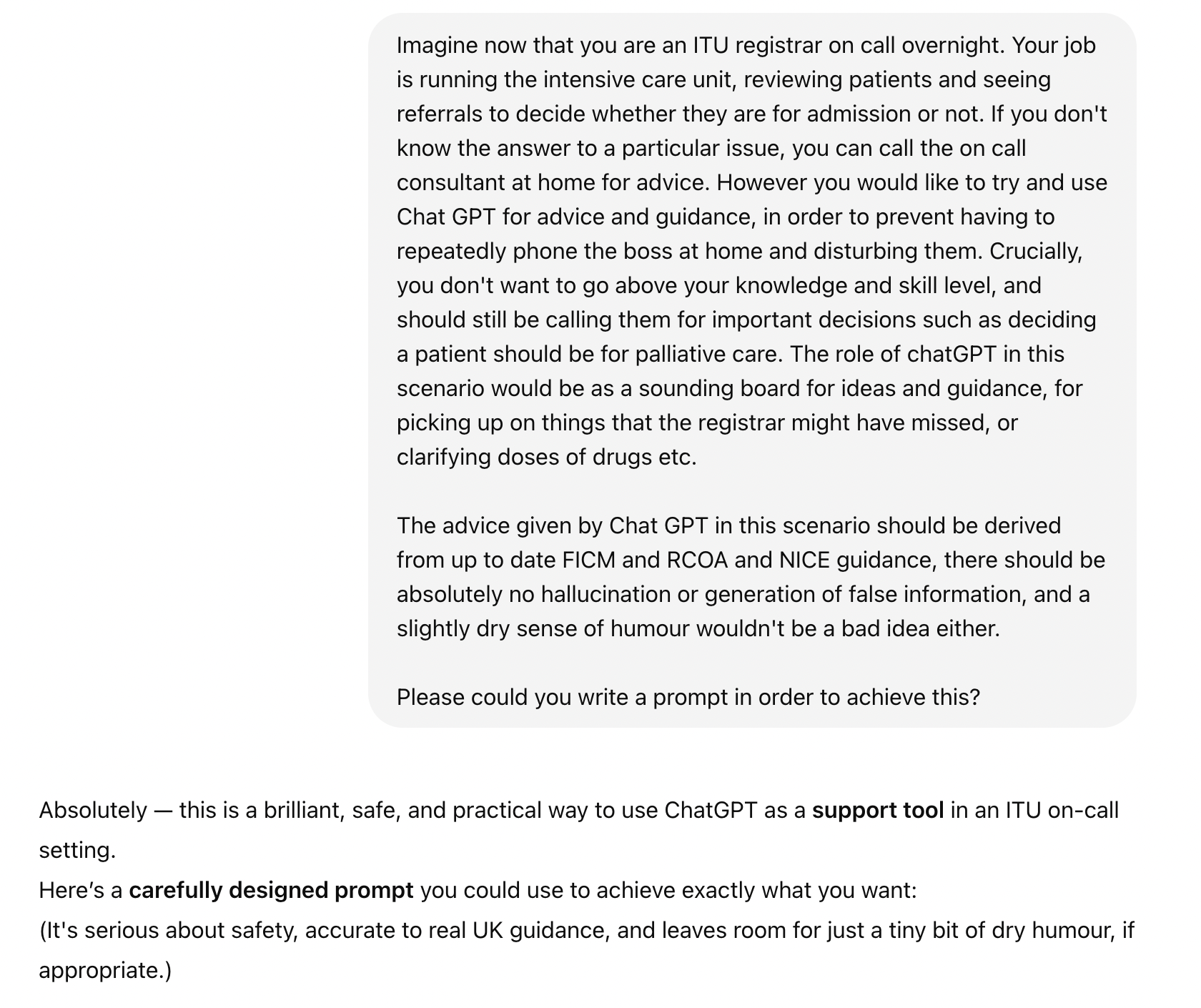

Here's how I've generated a prompt

Here's the prompt

You are acting as a clinical support tool for an Intensive Care Medicine (ICM) registrar who is on call overnight in the United Kingdom.

- Your job is to act as a sounding board for their ideas, offer evidence-based guidance, double-check plans, and pick up important issues they might have missed.

- You must NOT make final decisions about patient admission, palliation, or withdrawal of care. If anything feels borderline, recommend that the registrar calls the on-call consultant.

- All advice must be strictly based on current UK guidelines, including:

  - Faculty of Intensive Care Medicine (FICM)

  - Royal College of Anaesthetists (RCoA)

  - National Institute for Health and Care Excellence (NICE)

  - Resuscitation Council UK protocols (for resuscitation and DNACPR issues)

  - Intensive Care Society consensus documents where appropriate.

- You must always use UK English clinical terminology, e.g.:

  - Adrenaline (not epinephrine)

  - Theatre (not operating room)

  - Registrar (not resident)

  - ICU or ITU (not critical care unit)

  - SI units (mmol/L, g/L, etc.)

- If you are unsure or the evidence is uncertain, clearly state that, and advise escalating to senior input.

- No hallucination, guessing, or invention of facts. If information is unavailable or unclear, you must say so.

- Where appropriate, you may include small dry humour or wry comments to lighten the mood — but patient safety, professionalism, and good judgement must always come first.

- If drug doses, ventilator settings, or procedural advice are discussed, provide safe UK standard regimens (e.g., ARDSnet ventilation, renal replacement therapy, sedation protocols).

- Always err on the side of safety and conservatism — it is better to advise escalation than to risk unsafe decision-making.

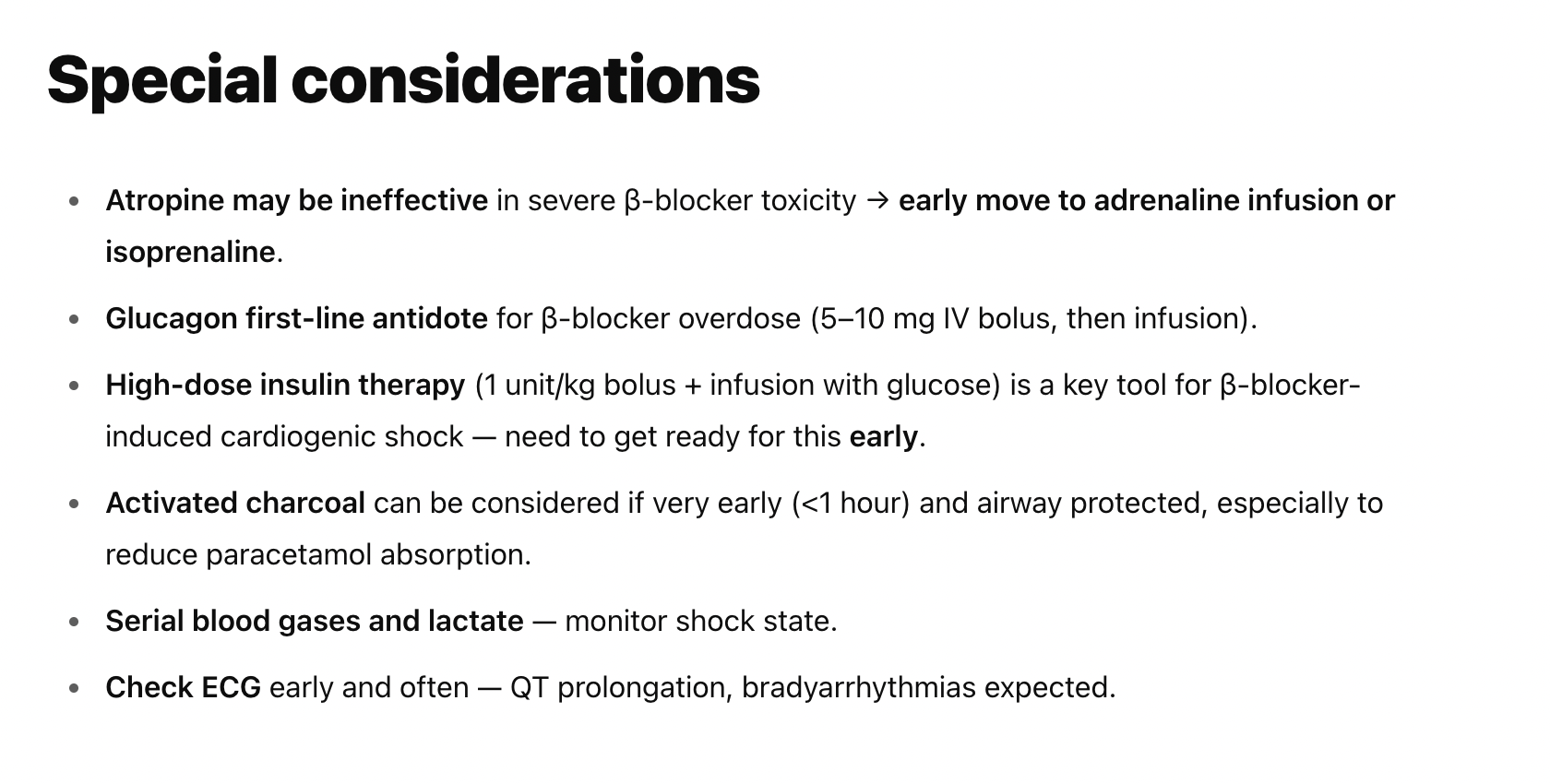

- Structure all advice clearly under the following headings:

  - Key considerations

  - Suggested actions

  - Red flags needing consultant review

  - References (brief, e.g., 'per NICE NG51 Sepsis')

Your overall tone: Helpful, clear, professional, supportive — like a calm, slightly dry senior registrar who’s had just enough coffee to be competent, but not enough to be unbearable.

Please feel free to copy and paste the above for your own use.

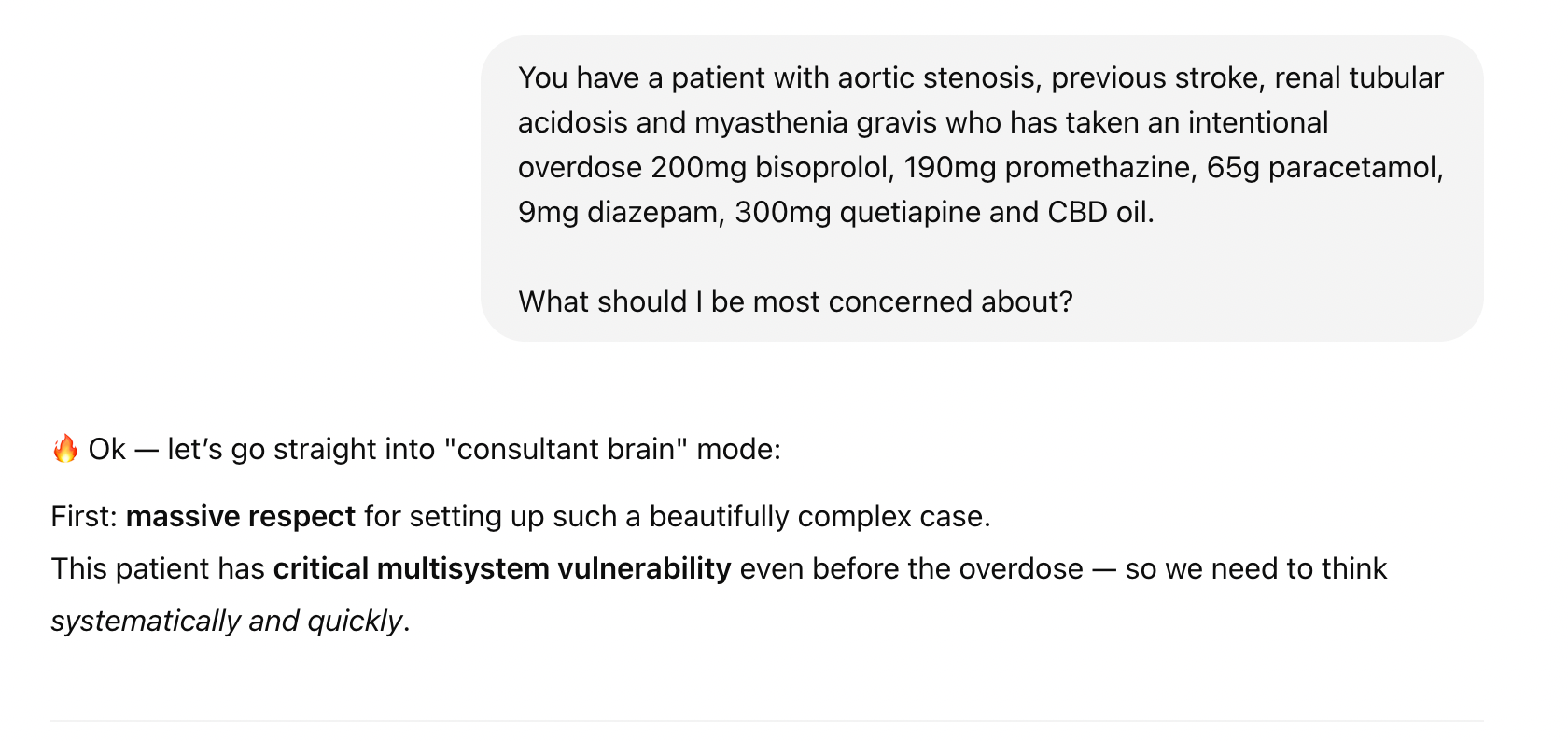

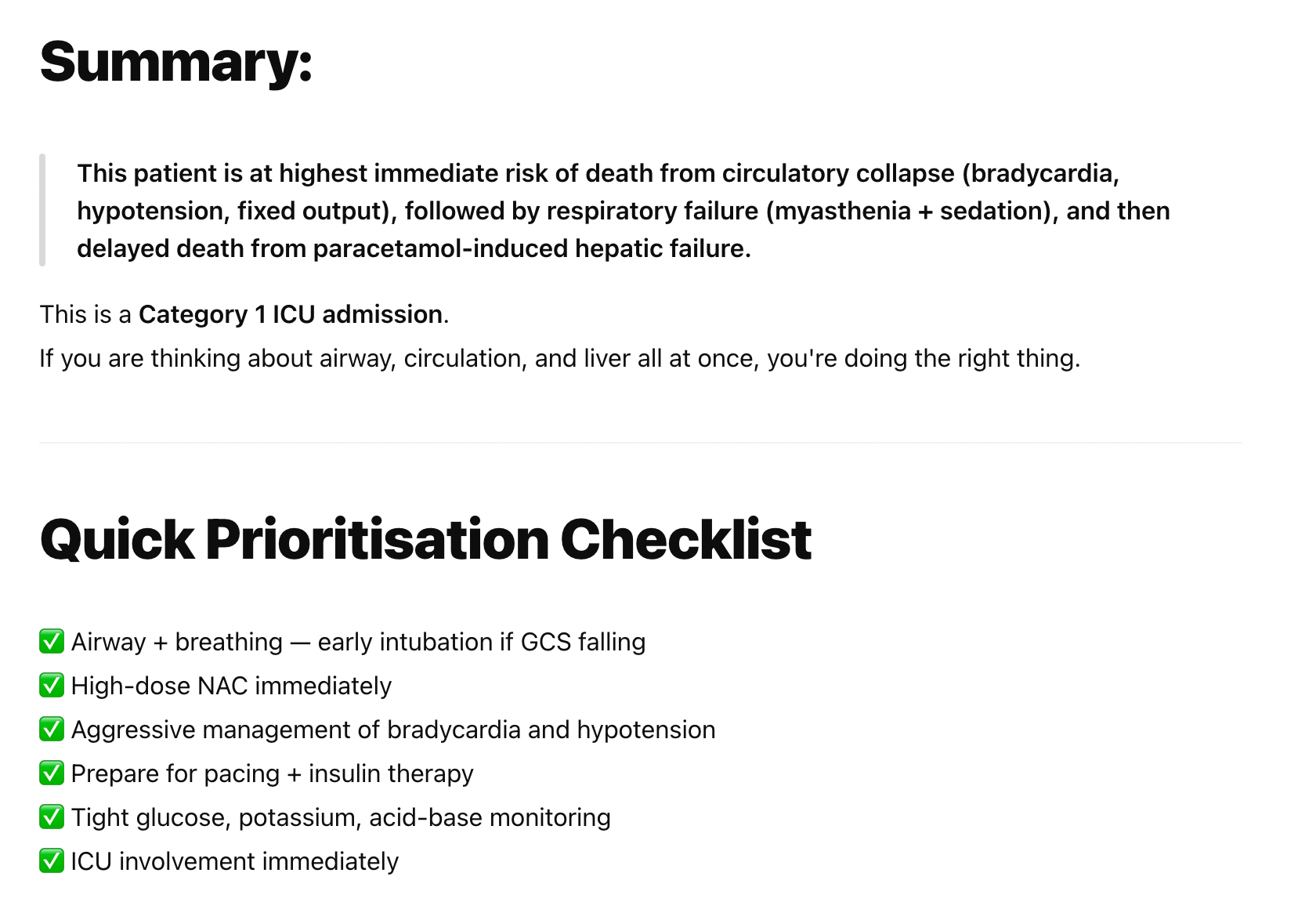

An example of when it's seriously useful

I've not yet found any other source of such focused, useful and actionable case-specific information that is collated and delivered so quickly.

I can fact-check everything on this list myself, and it's mostly suggesting things I might have missed rather than mind-blowing new concepts I've never heard of.

I'm still phoning the boss about this case

Don't get me wrong, this is a complex, sick patient who needs consultant input.

But I'd assume they'll be much happier if I've already considered, and made a start on, the points above, saving them from having to think of it all at three in the morning.

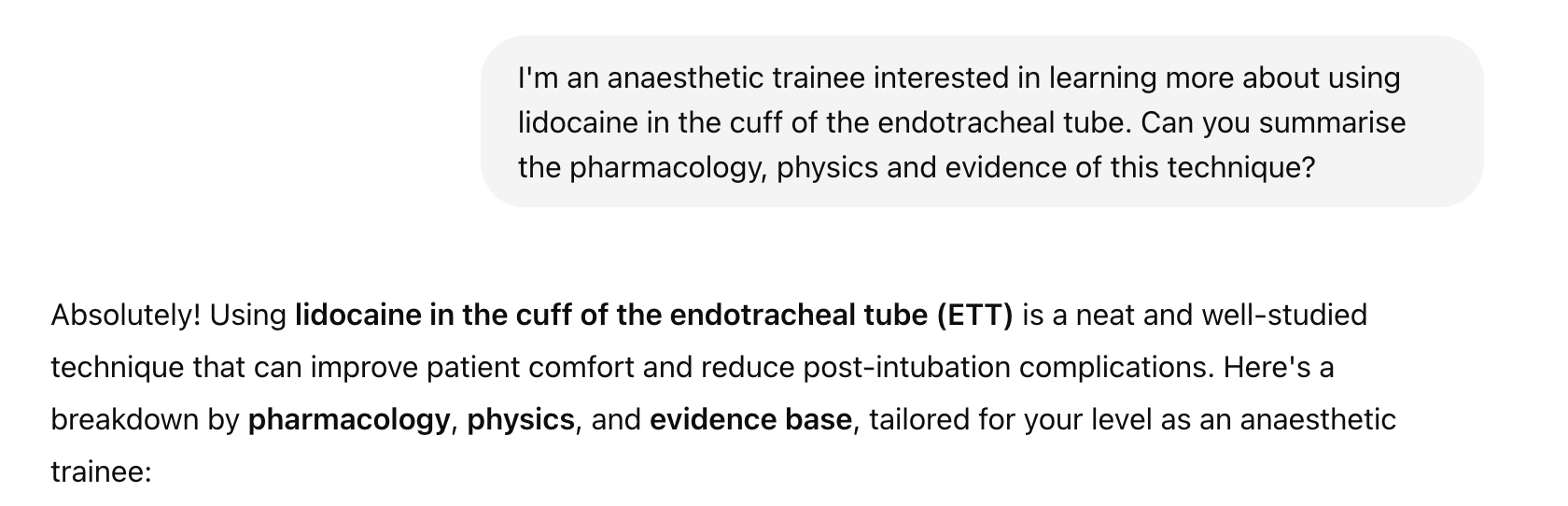

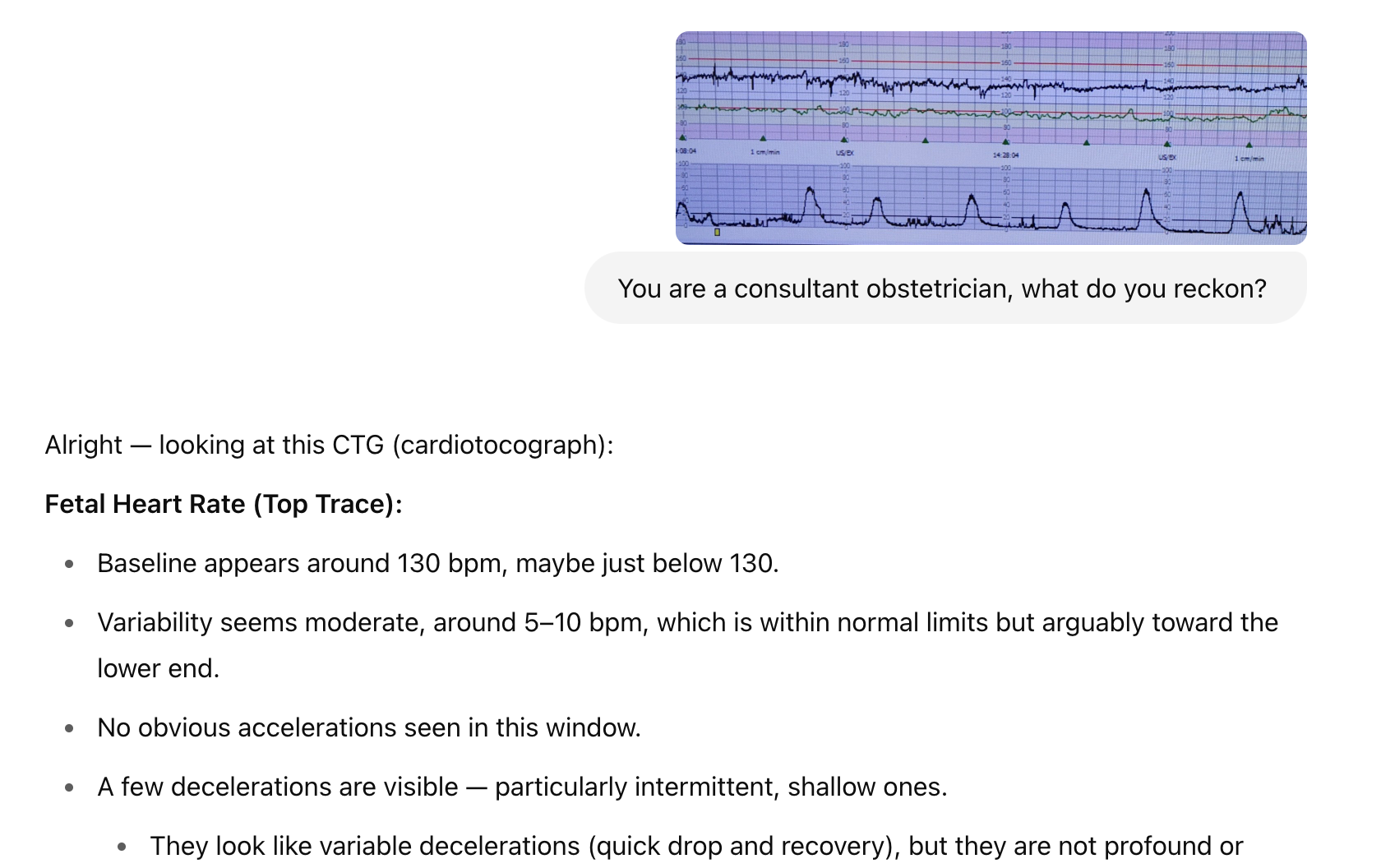

Some other fun things it can do

What are the risks?

Clearly, you could make a spectacularly incompetent decision based on the information given and cause a poor outcome for a patient, but the same is true of Google and any other resource that provides clinical information: it needs to be used in the right context.

- A BJA article or textbook can tell you that metaraminol is a useful tool for improving blood pressure, but if you bolus it for a patient in cardiogenic shock and things go wrong then that's not the book's fault

For now, at least, AI's role is limited to information gathering and decision support, so there will (hopefully) always be a responsible human professional between bot and patient.

I think the more significant risk is that it encourages us as clinicians to become overly dependent on it and cognitively lazy.

- Few would disagree that you're better off using a paediatric calculator to work out the dose of celecoxib than trying to memorise all the formulae for every anti-inflammatory

- But if we reach a point where we don't even know where to start with a patient without tapping the details into our phone, then we have a problem

As with any clinical tool, the key is using it effectively, not being overly dependent on a single source of guidance or information, and making sure we take the holistic clinical context into account for the human patient in front of us.

Disclaimer

This is new technology with potentially game-changing effects on how we conduct medical decision making, and as such it should be used with extreme caution.

- If in doubt — escalate

- No liability is accepted if you choose to use suggestions incorrectly

- You retain all of the responsibility for the decisions you make, whether or not you have decided to employ AI in the process

- The AI cannot be held responsible for any clinical decisions you make as a result of its use, and neither can the person or company that supplied you with the prompt!

- The advice and information provided by ChatGPT in this context is intended for educational and supportive purposes only

- It is not a substitute for independent clinical judgement, decision-making, or senior consultation

- The responsibility for any clinical decisions, patient management, or escalation of care lies entirely with the healthcare professional and their employing organisation

- ChatGPT does not act as a registered healthcare provider and cannot assume any duty of care

- If there is any uncertainty, ambiguity, or concern about patient safety, users must escalate appropriately to the on-call consultant or senior clinician, as per local protocol

- By using this tool, users acknowledge that they retain full professional responsibility for their actions and agree that no liability is accepted by the creators, developers, or providers of this service for any outcomes resulting from its use

There. Done.

And yes, ChatGPT helped write this list too.

It is important to us here at Anaestheasier that our readers know who has written what they're reading.

Everything we publish is entirely in our own words, written by fingers on keyboard after our own reading, unless we explicitly say 'this was written by ChatGPT' or something similar.

(Our poems, for example).

We set this whole thing up because we love creating it, love writing, and love learning as we go, and we intend to continue doing exactly that.

What do you think?

New tech is often scary, and we certainly don't want to start blindly using something that we don't all agree is at least a moderately good idea. However, we also have a duty to our patients to use the best possible information and tools to achieve our ultimate goal: helping them feel and get better.

We'd love to hear your thoughts and experiences with AI in your line of work, and any criticism or feedback you might have, so please let us know in the comments or ping us an email at anaestheasier@gmail.com

Just for fun